I’m not big on creating dashboards. I don’t look at them often enough to justify hosting the software on an instance and keeping a browser open to the page all the time.

Instead, I prefer to be alerted via Slack as much as possible. I wrote scripts to collect DNS records from Route53 and decided to expand on the idea by creating a job that runs on a schedule. This way my health checks are fully automated.

Before we get into the script, you might ask why I don’t just use Route53 health checks. The answer is fairly simple. First, the cost of HTTPS health checks doesn’t make sense for the number of web servers I am testing. Second, I don’t want to test Route53 or any other AWS resource from within AWS. I would rather run the tests from my own network, which is not connected to AWS.

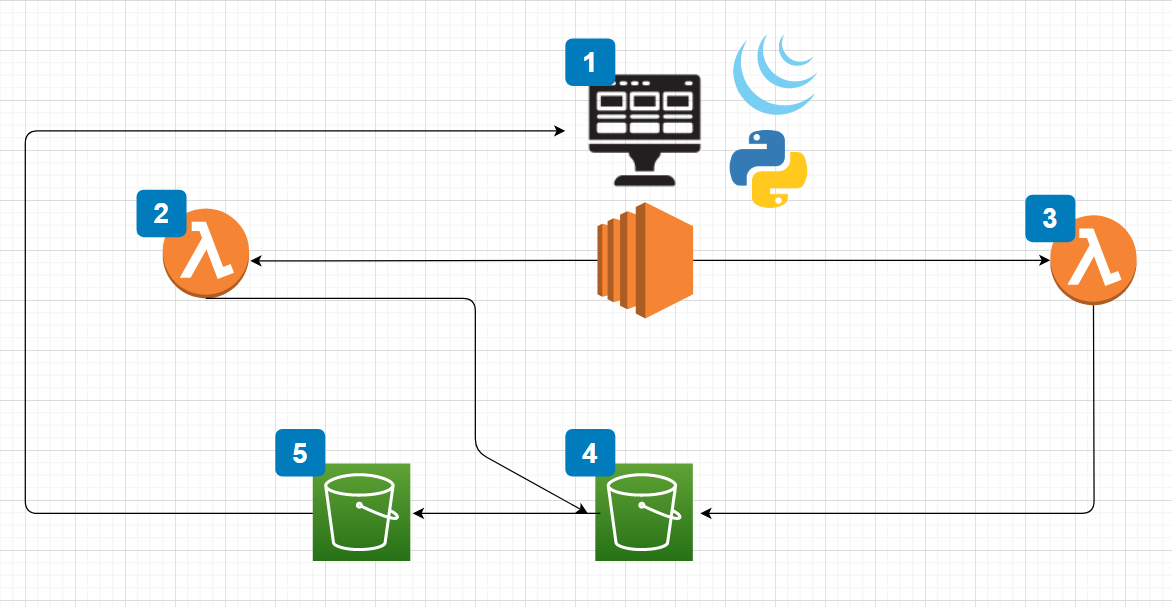

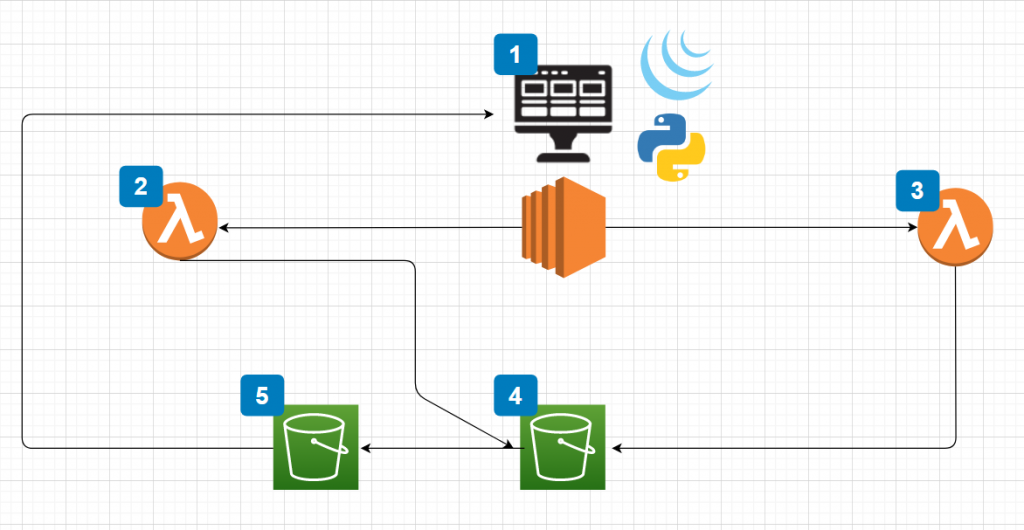

You can find the code and the Lambda function on GitHub. The overall program uses a few different AWS products:

- Route53

- CloudWatch Logs

- SNS

- Lambda

It also uses Slack, but that is an optional piece that I will explain. The main functions reside in “main.py”, which follows this process:

- Iterating over Route53 Records

- Filtering for “A” records and compiling a list of domains

- Testing each domain and processing the response code

- Logging all of the results to CloudWatch Logs

- Sending errors to the SNS topic
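The filtering and alerting decisions in those steps can be reasoned about without touching AWS. Below is a minimal, AWS-free sketch of them, assuming record sets shaped like the `ResourceRecordSets` entries returned by Route53's `list_resource_record_sets` (only the `Name` and `Type` keys are used). The helpers `a_record_urls` and `should_alert` and the sample data are hypothetical; the set of non-alerting codes mirrors the codes “main.py” logs without sending an alert.

```python
# Status codes that main.py logs without sending an SNS alert
NON_ALERT_CODES = {200, 301, 302, 401, 403}


def a_record_urls(record_sets):
    """Hypothetical helper: turn Route53-style record sets into https:// URLs.

    Only records whose Type is "A" are kept; the trailing dot that Route53
    puts on fully qualified names is stripped.
    """
    urls = []
    for record in record_sets:
        if record["Type"] == "A":
            urls.append(f"https://{record['Name'].rstrip('.')}")
    return urls


def should_alert(status_code):
    """Hypothetical helper: True when a status code should be sent to SNS."""
    return status_code not in NON_ALERT_CODES


# Made-up sample data in the shape Route53 returns
sample = [
    {"Name": "example.com.", "Type": "A"},
    {"Name": "www.example.com.", "Type": "CNAME"},
    {"Name": "api.example.com.", "Type": "A"},
]

print(a_record_urls(sample))
# ['https://example.com', 'https://api.example.com']
print([code for code in (200, 403, 500, 503) if should_alert(code)])
# [500, 503]
```

Keeping these decisions in small pure functions makes it easy to change which codes count as "healthy" without re-running the whole job against live servers.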

I run the script as a cron job every hour.

The second piece is the Lambda function. It is packaged in “lambda_function.zip”, but I also included the function outside the ZIP file for editing. You can modify this function to use your Slack credentials.

The Lambda function is subscribed to your SNS topic so that whenever a new message appears, that message is sent to your specified Slack channel.
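To show what the Lambda function actually receives, here is a minimal sketch of an SNS-to-Lambda event, trimmed to the fields the handler reads (`Records`, `Sns`, `Message`); real events carry more keys. `extract_messages` and `build_blocks` are hypothetical helpers that mirror what `lambda_handler` does before it calls Slack's `chat_postMessage`, so the parsing can be checked without a Slack token.

```python
def extract_messages(event):
    """Hypothetical helper: pull the SNS message text out of each record."""
    return [record["Sns"]["Message"] for record in event.get("Records", [])]


def build_blocks(text):
    """Hypothetical helper: the Block Kit payload lambda_handler posts to Slack."""
    return [{"type": "section", "text": {"type": "plain_text", "text": text}}]


# Trimmed-down event in the shape SNS delivers to a subscribed Lambda function
sample_event = {
    "Records": [
        {
            "EventSource": "aws:sns",
            "Sns": {"Message": "The site https://example.com reports: 503"},
        }
    ]
}

for message in extract_messages(sample_event):
    print(build_blocks(message))
```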

I have plans to test my Terraform skills to automate the deployment of the Lambda function, SNS topic, CloudWatch Logs, and the primary script in some form.

If you have any comments on how I could improve this function, please post a comment here or raise an issue on GitHub. If you find this script helpful in any way, feel free to share it with your friends!

Links:

Server Health Check – GitHub

Code – Main Function (main.py)

```python
import time

import boto3
import requests

# AWS clients
sns = boto3.client('sns')
aws = boto3.client('route53')
cw = boto3.client('logs')

# Replace these placeholders with your own resources
SNS_TOPIC_ARN = 'YOUR_SNS_TOPIC_ARN_HERE'
LOG_GROUP_NAME = 'YOUR_LOG_GROUP_NAME'
LOG_STREAM_NAME = 'YOUR_LOG_STREAM_NAME'

# Status codes that are logged but do not trigger an SNS alert
NON_ALERT_CODES = {200, 301, 302, 401, 403}

paginator = aws.get_paginator('list_resource_record_sets')

# Create empty lists
zone_id_to_test = []
dns_entries = []
zones_with_a_record = []

# Create list of zone IDs to get record sets from (paginated, so more than
# 100 hosted zones are handled). Route53 returns IDs such as
# '/hostedzone/Z123EXAMPLE'; keep only the part after the last slash.
for page in aws.get_paginator('list_hosted_zones').paginate():
    for zone in page['HostedZones']:
        zone_id_to_test.append(zone['Id'].split('/')[-1])


def getARecord(zoneid):
    """Collect the record sets of every hosted zone into dns_entries."""
    for zone in zoneid:
        try:
            for record_set in paginator.paginate(HostedZoneId=zone):
                dns_entries.append(record_set['ResourceRecordSets'])
        except Exception as error:
            print(f'Failed to list records for zone {zone}: {error}')
            raise


def getCNAME(entry):
    """Despite its name, keep only A records and turn them into https:// URLs."""
    for dns_entry in entry:
        for record in dns_entry:
            if record['Type'] == 'A':
                # Route53 names end with a trailing dot, e.g. 'example.com.'
                final_url = record['Name'].rstrip('.')
                zones_with_a_record.append(f"https://{final_url}")


def sendToSNS(message):
    """Publish an alert message to the SNS topic."""
    try:
        sns.publish(TopicArn=SNS_TOPIC_ARN, Message=message)
    except Exception as error:
        print(f"Failed to publish to SNS: {error}")


def writeLog(message):
    """Write one event to CloudWatch Logs.

    PutLogEvents no longer requires a sequence token, so none is fetched.
    The timestamp is taken per event, in milliseconds since the epoch.
    """
    cw.put_log_events(
        logGroupName=LOG_GROUP_NAME,
        logStreamName=LOG_STREAM_NAME,
        logEvents=[{'timestamp': int(time.time() * 1000), 'message': message}],
    )


def tester(urls):
    """Request each URL, log its status code, and alert on unexpected codes."""
    user_agent = {'User-agent': 'Mozilla/5.0'}
    for url in urls:
        try:
            # The timeout (seconds) keeps one hung server from stalling the run
            status = requests.get(url, headers=user_agent,
                                  allow_redirects=True, timeout=10)
        except requests.RequestException as error:
            # No response was received, so there is no status code to report
            sendToSNS(f"The site {url} failed testing")
            writeLog(f"The site {url} failed testing: {error}")
            continue

        code = status.status_code
        if code not in NON_ALERT_CODES:
            sendToSNS(f"The site {url} reports: {code}")
        writeLog(f"The site {url} reports status code: {code}")


# Execute
getARecord(zone_id_to_test)
getCNAME(dns_entries)
tester(zones_with_a_record)
```

Code – Lambda Function (lambda_function.py)

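```python
import logging
import os

# slackclient 2.x import path; with the newer slack_sdk package use
# "from slack_sdk import WebClient" and "from slack_sdk.errors import SlackApiError"
from slack import WebClient
from slack.errors import SlackApiError

# Lambda pre-configures the root logger, so set its level directly
# (logging.basicConfig would have no effect there)
logger = logging.getLogger()
logger.setLevel(logging.DEBUG)

# The bot token is read from the "slackBot" environment variable
client = WebClient(token=os.environ["slackBot"])


def lambda_handler(event, context):
    # Each SNS notification arrives as a record; the alert text is in Sns.Message
    for record in event['Records']:
        detail = record['Sns']['Message']
        try:
            client.chat_postMessage(
                channel="YOUR CHANNEL HERE",
                text="SERVER DOWN",  # fallback text shown in notifications
                blocks=[{"type": "section",
                         "text": {"type": "plain_text", "text": detail}}],
            )
        except SlackApiError as e:
            # Log the Slack error instead of silently discarding it
            logger.error("Slack API error: %s", e.response["error"])
```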