Author: Aaron VanSledright

Automating Proper Terraform Formatting using Git Pre-Hooks

I’ve noticed lately that a lot of Terraform is formatted differently. Some developers use two indents, others one. As long as the Terraform is functional, most people overlook the formatting of their infrastructure-as-code files.

Personally, I don’t think we should ever push messy code into our repositories. How could we solve this problem? Well, Terraform has a built-in formatter: the `terraform fmt` command will automatically format your code. The hook script below checks every Terraform file before a push:

```bash
#!/usr/bin/env bash

echo "Running Terraform format check..."

# Initialize variables
EXIT_CODE=0
AFFECTED_FILES=()

# Detect OS for cross-platform compatibility
OS=$(uname -s)
IS_WINDOWS=false
if [[ "$OS" == MINGW* ]] || [[ "$OS" == CYGWIN* ]] || [[ "$OS" == MSYS* ]]; then
  IS_WINDOWS=true
fi

# Find all .tf files - cross-platform compatible method
if [ "$IS_WINDOWS" = true ]; then
  # For Windows using Git Bash
  TF_FILES=$(find . -type f -name "*.tf" -not -path "*/\\.*" | sed 's/\\/\//g')
else
  # For Linux/Mac
  TF_FILES=$(find . -type f -name "*.tf" -not -path "*/\.*")
fi

# Check each file individually for better reporting
# (note: paths containing spaces are not handled by this loop)
for file in $TF_FILES; do
  # Get the directory of the file
  dir=$(dirname "$file")
  filename=$(basename "$file")

  # Run terraform fmt check on the specific file and record it if the check fails
  if ! terraform -chdir="$dir" fmt -check "$filename" >/dev/null 2>&1; then
    AFFECTED_FILES+=("$file")
    EXIT_CODE=1
  fi
done

# If any files need formatting, list them and exit with error
if [ $EXIT_CODE -ne 0 ]; then
  echo "Error: The following Terraform files need formatting:"
  for file in "${AFFECTED_FILES[@]}"; do
    echo "  - $file"
  done
  echo ""
  echo "Please run the following command to format these files:"
  echo "terraform fmt -recursive"
  exit 1
fi

echo "All Terraform files are properly formatted"
exit 0
```

Save this script as `.git/hooks/pre-push` and make it executable so that it runs automatically when someone does a push. If there is badly formatted Terraform you should see something like:

```
Running Terraform format check...
Error: The following Terraform files need formatting:
  - ./main.tf

Please run the following command to format these files:
terraform fmt -recursive
error: failed to push some refs to 'github.com:avansledright/terraform-fmt-pre-hook.git'
```

After running `terraform fmt -recursive`, the push should succeed!
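Installing the hook by hand is easy to forget on a fresh clone, so a small helper can do it for you. The following is a minimal Python sketch, assuming the hook script is kept in the repository as `terraform-fmt-check.sh` (a hypothetical filename). It copies the script to `.git/hooks/pre-push`, the hook Git runs before a push, and marks it executable.

```python
import shutil
import stat
from pathlib import Path


def install_pre_push_hook(script_path, repo_root="."):
    """Copy a hook script to .git/hooks/pre-push and make it executable."""
    hooks_dir = Path(repo_root) / ".git" / "hooks"
    if not hooks_dir.is_dir():
        raise FileNotFoundError(f"{hooks_dir} not found; is this a Git repository?")

    target = hooks_dir / "pre-push"
    shutil.copyfile(script_path, target)

    # Git skips hooks that are not executable, so add the execute bits.
    mode = target.stat().st_mode
    target.chmod(mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
    return target
```

From the repository root, `install_pre_push_hook("terraform-fmt-check.sh")` would put the hook in place. Hooks are not shared through the repository itself, so each developer has to run something like this once per clone.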

If this was helpful to you or your team, please share it across your social media!

Building out a reusable Terraform framework for Flask Applications

I find myself using the same architecture for deploying demo applications built on the great Python library Flask. I’ve been using the same Terraform files over and over again to build out the infrastructure.

Last weekend I decided it was time to build a reusable framework for deploying these applications. So, I began building out the repository. The purpose of this repository is to give myself a jumping off point to quickly deploy applications for demonstrations or live environments.

Let’s take a look at the features:

- Customizable Environments within Terraform for managing the infrastructure across your development and production environments

- Modules for:

  - Application Load Balancer

  - Elastic Container Registry

  - Elastic Container Service

  - VPC & Networking components

- Dockerfile and Docker Compose file for launching and building the application

- Demo code for the Flask application

- Automated build and deploy for the container upon code changes

This module is built for any developer who wants to get started quickly and deploy applications fast. Using this framework will allow you to speed up your development time by being able to focus solely on the application rather than the infrastructure.

Upcoming features:

- CI/CD features using either GitHub Actions or AWS services such as CodePipeline and CodeBuild

- Custom Domain Name support for your application

If there are other features you would like to see me add, shoot me a message anytime!

Check out the repository here:

https://github.com/avansledright/terraform-flask-module

Create an Image Labeling Application using Artificial Intelligence

I have a PowerPoint party to go to soon. Yes you read that right. At this party everyone is required to present a short presentation about any topic they want. Last year I made a really cute presentation about a day in the life of my dog.

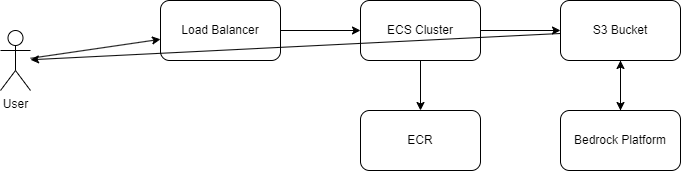

This year I have decided that I want to bore everyone to death and talk about technology, Python, Terraform and Artificial Intelligence. Specifically, I built an application that allows a user to upload an image and get back a renamed file that is labeled based on the object or scene in the image.

The architecture is fairly simple. We have a user connecting to a load balancer which routes traffic to our containers. The containers connect to Bedrock and S3 to process the image.

If you want to try it out, the site is hosted at https://image-labeler.vansledright.com. It will be up for some time; I haven’t decided how long I will host it, but at least through this weekend!

Here is the code that interacts with Bedrock and S3 to process the image:

```python
import base64
import json
import os
from urllib.parse import unquote

from flask import jsonify, request

# Excerpt: app, s3, bedrock, logger and compress_image are defined
# elsewhere in the application.


def process_image():
    if not request.is_json:
        return jsonify({'error': 'Content-Type must be application/json'}), 400

    data = request.json
    file_key = data.get('fileKey')
    if not file_key:
        return jsonify({'error': 'fileKey is required'}), 400

    try:
        # Get the image from S3
        s3_response = s3.get_object(Bucket=app.config['S3_BUCKET_NAME'], Key=file_key)
        image_data = s3_response['Body'].read()

        # Check if image is larger than 5MB
        if len(image_data) > 5 * 1024 * 1024:
            logger.info("File size too large. Compressing image")
            image_data = compress_image(image_data)

        # Convert image to base64
        base64_image = base64.b64encode(image_data).decode('utf-8')

        # Prepare prompt for Claude
        prompt = """Please analyze the image and identify the main object or subject.
        Respond with just the object name in lowercase, hyphenated format.
        For example: 'coca-cola-can' or 'golden-retriever'."""

        # Call Bedrock with Claude
        bedrock_response = bedrock.invoke_model(
            modelId='anthropic.claude-3-sonnet-20240229-v1:0',
            body=json.dumps({
                "anthropic_version": "bedrock-2023-05-31",
                "max_tokens": 100,
                "messages": [
                    {
                        "role": "user",
                        "content": [
                            {
                                "type": "text",
                                "text": prompt
                            },
                            {
                                "type": "image",
                                "source": {
                                    "type": "base64",
                                    "media_type": s3_response['ContentType'],
                                    "data": base64_image
                                }
                            }
                        ]
                    }
                ]
            })
        )

        response_body = json.loads(bedrock_response['body'].read())
        object_name = response_body['content'][0]['text'].strip()
        logger.info(f"Object found is: {object_name}")

        if not object_name:
            return jsonify({'error': 'Could not identify object in image'}), 422

        # Get file extension and create new filename
        _, ext = os.path.splitext(unquote(file_key))
        new_file_name = f"{object_name}{ext}"
        new_file_key = f'processed/{new_file_name}'

        # Copy object to new location
        s3.copy_object(
            Bucket=app.config['S3_BUCKET_NAME'],
            CopySource={'Bucket': app.config['S3_BUCKET_NAME'], 'Key': file_key},
            Key=new_file_key
        )

        # Generate download URL
        download_url = s3.generate_presigned_url(
            'get_object',
            Params={
                'Bucket': app.config['S3_BUCKET_NAME'],
                'Key': new_file_key
            },
            ExpiresIn=3600
        )

        return jsonify({
            'downloadUrl': download_url,
            'newFileName': new_file_name
        })

    except json.JSONDecodeError as e:
        logger.error(f"Error decoding Bedrock response: {str(e)}")
        return jsonify({'error': 'Invalid response from AI service'}), 500
    except Exception as e:
        logger.error(f"Error processing image: {str(e)}")
        return jsonify({'error': 'Error processing image'}), 500
```

If you think this project is interesting, feel free to share it with your friends or message me if you want all of the code!
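One detail worth guarding against: the request passes the S3 object's `ContentType` straight through as the image `media_type`, and S3 falls back to a generic type when the uploader does not set one. A small helper along these lines could normalize it first. This is a sketch and not part of the application above; `resolve_media_type` is a hypothetical name, and the allowed set is my understanding of the image types Claude accepts (JPEG, PNG, GIF and WebP), which you should verify against the current Bedrock documentation.

```python
import mimetypes

# Image types accepted for Claude image inputs (an assumption to verify
# against the current Bedrock documentation).
SUPPORTED_MEDIA_TYPES = {"image/jpeg", "image/png", "image/gif", "image/webp"}


def resolve_media_type(content_type, file_key):
    """Return a supported image media type, or raise ValueError."""
    # Drop any parameters such as "; charset=..." and normalize the case.
    candidate = (content_type or "").split(";")[0].strip().lower()
    if candidate not in SUPPORTED_MEDIA_TYPES:
        # Fall back to guessing from the file extension in the object key.
        guessed, _ = mimetypes.guess_type(file_key)
        candidate = (guessed or "").lower()
    if candidate not in SUPPORTED_MEDIA_TYPES:
        raise ValueError(f"Unsupported image type for {file_key!r}")
    return candidate
```

With this in place, the `media_type` field would be set from `resolve_media_type(...)` using the S3 content type and `file_key`, and a `ValueError` could be turned into a 4xx response instead of a failed model call.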

Building a Python Script to Export WordPress Posts: A Step-by-Step Database to CSV Guide

Today, I want to share a Python script I’ve been using to extract blog posts from WordPress databases. Whether you’re planning to migrate your content, create backups, or analyze your blog posts, this tool makes it straightforward to pull your content into a CSV file.

I originally created this script when I needed to analyze my blog’s content patterns, but it’s proven useful for various other purposes. Let’s dive into how you can use it yourself.

Prerequisites

Before we start, you’ll need a few things set up on your system:

- Python 3.x installed on your machine

- Access to your WordPress database credentials

- Basic familiarity with running Python scripts

Setting Up Your Environment

First, you’ll need to install the required Python packages. Open your terminal and run:

```
pip install mysql-connector-python pandas python-dotenv
```

Next, create a file named `.env` in your project directory. This will store your database credentials securely:

```
DB_HOST=your_database_host
DB_USERNAME=your_database_username
DB_PASS=your_database_password
DB_NAME=your_database_name
# Usually 'wp' unless you changed it during installation
DB_PREFIX=wp
```

The Script in Action

The script is pretty straightforward – it connects to your WordPress database, fetches all published posts, and saves them to a CSV file. Here’s what happens under the hood:

- Loads environment variables from your .env file

- Establishes a secure connection to your WordPress database

- Executes a SQL query to fetch all published posts

- Converts the results to a pandas DataFrame

- Saves everything to a CSV file named ‘wordpress_blog_posts.csv’
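The steps above can be sketched roughly as follows. This is a simplified stand-in, not the script from the repository: it swaps pandas for the standard library's `csv` module so the helpers run without extra packages, and the function names and the selected columns are my own choices for illustration. The table and column names (`wp_posts`, `post_status`, `post_type`) come from the standard WordPress schema.

```python
import csv
import os


def build_posts_query(prefix):
    """Return SQL selecting published posts from the <prefix>_posts table."""
    # Table names cannot be bound as query parameters, so validate the prefix.
    if not prefix.replace("_", "").isalnum():
        raise ValueError(f"Unexpected table prefix: {prefix!r}")
    return (
        "SELECT ID, post_title, post_date, post_content "
        f"FROM {prefix}_posts "
        "WHERE post_status = 'publish' AND post_type = 'post' "
        "ORDER BY post_date DESC"
    )


def write_posts_csv(rows, path):
    """Write (id, title, date, content) rows to a CSV file with a header."""
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        writer.writerow(["ID", "post_title", "post_date", "post_content"])
        writer.writerows(rows)


def main():
    # Third-party imports live here so the helpers above work without them.
    import mysql.connector
    from dotenv import load_dotenv

    load_dotenv()  # reads DB_* values from the .env file
    connection = mysql.connector.connect(
        host=os.environ["DB_HOST"],
        user=os.environ["DB_USERNAME"],
        password=os.environ["DB_PASS"],
        database=os.environ["DB_NAME"],
    )
    try:
        cursor = connection.cursor()
        cursor.execute(build_posts_query(os.environ.get("DB_PREFIX", "wp")))
        write_posts_csv(cursor.fetchall(), "wordpress_blog_posts.csv")
    finally:
        connection.close()
```

Calling `main()` runs the export end to end. Keeping the query builder and the CSV writer as separate functions makes them easy to check without a live database.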

Running the script is as simple as:

```
python main.py
```

Security Considerations

A quick but important note about security: never commit your .env file to version control. I’ve made this mistake before, and trust me, you don’t want your database credentials floating around in your Git history. Add .env to your .gitignore file right away.

Potential Use Cases

I wrote this script to feed my posts to AI to help with SEO optimization and also help with writing content for my other businesses. Here are some other ways I’ve found this script useful:

- Creating offline backups of blog content

- Analyzing post patterns and content strategy

- Preparing content for migration to other platforms

- Generating content reports

Room for Improvement

The script is intentionally simple, but there’s plenty of room for enhancement. You might want to add:

- Support for extracting post meta data

- Category and tag information

- Featured image URLs

- Comment data

Wrapping Up

This tool has saved me countless hours of manual work, and I hope it can do the same for you. Feel free to grab the code from my GitHub repository and adapt it to your needs. If you run into any issues or have ideas for improvements, drop a comment below.

Happy coding!

Get the code on GitHub

2024 Year in Review: A Journey Through Code and Creation

As another year wraps up, I wanted to take a moment to look back at what I’ve shared and built throughout 2024. While I might not have posted as frequently as in some previous years (like 2020’s 15 posts!), each post this year represents a significant technical exploration or project that I’m proud to have shared.

The Numbers

This year, I published 9 posts, maintaining a steady rhythm of about one post per month. April was my most productive month with 2 posts, and I managed to keep the blog active across eight different months of the year. Looking at the topics, I’ve written quite a bit about Python, Lambda functions, and building various tools and automation solutions. Security and Discord-related projects also featured prominently in my technical adventures.

Highlights and Major Projects

Looking back at my posts, a few major themes emerged:

- File Processing and Automation: I spent considerable time working with file processing systems, creating efficient workflows and sharing my experiences with different approaches to handling data at scale.

- Python Development: From Lambda functions to local tooling, Python remained a core focus of my technical work this year. I’ve shared both successes and challenges, including that Thanksgiving holiday project that consumed way more time than expected (but was totally worth it!).

- Security and Best Practices: Throughout the year, I maintained a strong focus on security considerations in development, sharing insights and implementations that prioritize robust security practices.

Community and Testing

One consistent theme in my posts has been the value of community feedback and testing. I’ve actively sought input on various projects, from interface design to data processing implementations. This collaborative approach has led to more robust solutions and better outcomes.

Looking Forward to 2025

As we head into 2025, I’m excited to increase my posting frequency while continuing to share technical insights, project experiences, and practical solutions to real-world development challenges. There are already several projects in the pipeline that I can’t wait to write about. I also hope to ride 6000 miles on my bike throughout Chicago this year.

For those interested, my most popular GitHub repositories were:

- bedrock-poc-public

- count-s3-objects

- delete-lambda-versions

- dynamo-user-manager

- genai-photo-processor

- lex-bot-local-tester

- presigned-url-gateway

- s3-object-re-encryption

Thank You

To everyone who’s read, commented, tested, or contributed to any of the projects I’ve written about this year – thank you. Your engagement and feedback have made these posts and projects better. While this year saw fewer posts than some previous years, each one represented a significant project or learning experience that I hope provided value to readers.

Here’s to another year of coding, learning, and sharing!

Converting DrawIO Diagrams to Terraform

I’m going to start this post off by saying that I need testers: people to test this process from an interface perspective as well as a data perspective. I’m limited in the amount of test data that I have to put through the process.

With that said, I spent my Thanksgiving holiday writing code, building this project and putting in way more time than I thought I would, but boy is it cool.

If you’re like me and working in a Cloud Engineering capacity then you probably have built a DrawIO diagram at some point in your life to describe or define your AWS architecture. Then you have spent countless hours using that diagram to write your Terraform. I’ve built something that will save you those hours and get you started on your cloud journey.

Enter https://drawiototerraform.com, my new tool that allows you to convert your DrawIO AWS architecture diagrams to Terraform just by uploading them. The process uses a combination of Python and LLMs to identify the components in your diagram and their relationships, write the base Terraform, analyze the initial Terraform for syntax errors and ultimately test the Terraform by generating a Terraform plan.

All this is then delivered to you as a ZIP file for you to review, modify and ultimately deploy to your environment. By no means is it perfect yet and that is why I am looking for people to test the platform.
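To give a feel for the first step, identifying components and their relationships, here is a minimal Python sketch. It is not the code behind the site. It only assumes the diagram is saved as uncompressed DrawIO XML, where shapes are `mxCell` elements marked `vertex="1"` and connectors are marked `edge="1"` with `source` and `target` ids. The function name, the sample diagram and its style strings are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Illustrative diagram: two shapes joined by one connector.
SAMPLE_DIAGRAM = """<mxfile><diagram name="Page-1"><mxGraphModel><root>
<mxCell id="0"/>
<mxCell id="1" parent="0"/>
<mxCell id="2" value="ALB" style="shape=mxgraph.aws4.resourceIcon;" vertex="1" parent="1"/>
<mxCell id="3" value="ECS" style="shape=mxgraph.aws4.resourceIcon;" vertex="1" parent="1"/>
<mxCell id="4" style="edgeStyle=orthogonalEdgeStyle;" edge="1" source="2" target="3" parent="1"/>
</root></mxGraphModel></diagram></mxfile>"""


def extract_components(drawio_xml):
    """Return (nodes, edges) found in uncompressed DrawIO XML."""
    root = ET.fromstring(drawio_xml)
    nodes, edges = {}, []
    for cell in root.iter("mxCell"):
        if cell.get("vertex") == "1":
            # Shapes carry their label in "value" and their type in "style".
            nodes[cell.get("id")] = {
                "label": cell.get("value", ""),
                "style": cell.get("style", ""),
            }
        elif cell.get("edge") == "1":
            source, target = cell.get("source"), cell.get("target")
            # Skip dangling connectors that are not attached at both ends.
            if source and target:
                edges.append((source, target))
    return nodes, edges


nodes, edges = extract_components(SAMPLE_DIAGRAM)
for source, target in edges:
    print(f"{nodes[source]['label']} -> {nodes[target]['label']}")
```

A node and edge list like this is the kind of structured input you could hand to an LLM along with a prompt asking for the matching Terraform resources. Compressed diagrams and labels stored on wrapper elements would need extra handling that this sketch leaves out.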

If you, or someone you know, is interested in helping me test, have them reach out to me through the website’s support page and I will get them some free credits so that they can test out the platform with their own diagrams.

If you are interested in learning more about the project in any capacity, do not hesitate to reach out to me at any time.

Website: https://drawiototerraform.com

API For Pre-signed URLs

Pre-signed URLs are used for downloading objects from AWS S3 buckets. I’ve used them many times in the past for various reasons, but this idea was a new one: a proof of concept for an API that would create the pre-signed URL and return it to the user.

This solution utilizes an API Gateway and an AWS Lambda function. The API Gateway takes two parameters “key” and “expiration”. Ultimately, you could add another parameter for “bucket” if you wanted the gateway to be able to get objects from multiple buckets.

I used Terraform to create the infrastructure and Python to program the Lambda.

Take a look at the Lambda code below:

```python
import boto3
import json
import os
from botocore.exceptions import ClientError


def lambda_handler(event, context):
    # Get the query parameters
    query_params = event.get('queryStringParameters', {})

    if not query_params or 'key' not in query_params:
        return {
            'statusCode': 400,
            'body': json.dumps({'error': 'Missing required parameter: key'})
        }

    object_key = query_params['key']
    try:
        expiration = int(query_params.get('expiration', 3600))  # Default 1 hour
    except ValueError:
        # Reject a non-numeric expiration instead of failing with an unhandled error
        return {
            'statusCode': 400,
            'body': json.dumps({'error': 'expiration must be an integer number of seconds'})
        }

    # Initialize S3 client
    s3_client = boto3.client('s3')
    bucket_name = os.environ['BUCKET_NAME']

    try:
        # Generate presigned URL
        url = s3_client.generate_presigned_url(
            'get_object',
            Params={
                'Bucket': bucket_name,
                'Key': object_key
            },
            ExpiresIn=expiration
        )

        return {
            'statusCode': 200,
            'headers': {
                'Access-Control-Allow-Origin': '*',
                'Content-Type': 'application/json'
            },
            'body': json.dumps({
                'url': url,
                'expires_in': expiration
            })
        }

    except ClientError as e:
        return {
            'statusCode': 500,
            'body': json.dumps({'error': str(e)})
        }
```

The Terraform will also output a Postman collection JSON file so that you can immediately import it for testing. If this code and pattern are useful to you, check it out on my GitHub below.
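Calling the API from a client is just a GET request with the “key” and “expiration” query parameters. Here is a minimal Python sketch using only the standard library. The invoke URL is a placeholder, and the helper names are mine rather than part of the repository.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Placeholder invoke URL (an assumption); use the one from your own deployment.
API_URL = "https://example.execute-api.us-east-1.amazonaws.com/prod/presign"


def build_request_url(key, expiration=3600, api_url=API_URL):
    """Build the GET URL with the 'key' and 'expiration' query parameters."""
    if expiration <= 0:
        raise ValueError("expiration must be a positive number of seconds")
    # urlencode escapes slashes and spaces in the object key.
    query = urlencode({"key": key, "expiration": expiration})
    return f"{api_url}?{query}"


def fetch_presigned_url(key, expiration=3600, api_url=API_URL):
    """Call the API and return the 'url' field from its JSON response."""
    with urlopen(build_request_url(key, expiration, api_url), timeout=10) as response:
        return json.load(response)["url"]
```

For example, `fetch_presigned_url("reports/summary.pdf", expiration=900)` would ask for a link that stays valid for 15 minutes, assuming an object with that key exists in the bucket.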

Adding a reminder feature to Discord

As many of you know, I released my Discord Bot Framework the other week. This has prompted me to put some time into developing new features for the bots I manage.

I’ve always been a fan of the “remind” functionality that many Reddit communities have. In my Discord, we are often sending media content that I want to watch but don’t want to forget about. Hence the need for the command “!remind”. Surprisingly this was pretty simple code. Take a look below for the functionality I created.

```python
import asyncio

# Assumes `client` is the bot instance already defined in your bot's code.


@client.command(name='remind')
async def remind(ctx, duration: str):
    try:
        # Parse the duration (e.g., "10s" for 10 seconds, "5m" for 5 minutes, "1h" for 1 hour)
        time_units = {'s': 1, 'm': 60, 'h': 3600}
        time_value = int(duration[:-1])
        time_unit = duration[-1]

        if time_unit not in time_units:
            await ctx.send("Invalid time format! Use s (seconds), m (minutes), or h (hours).")
            return

        delay = time_value * time_units[time_unit]

        # Check if the command is a reply to another message
        if ctx.message.reference and ctx.message.reference.resolved:
            # Get the replied message
            replied_message = ctx.message.reference.resolved
            message_link = f"https://discord.com/channels/{ctx.guild.id}/{ctx.channel.id}/{replied_message.id}"
        else:
            await ctx.send("Please reply to a message you want to be reminded about.")
            return

        await ctx.send(f"Okay, I will remind you in {duration} to check out the message you referenced: {message_link}")

        # Wait for the specified duration
        await asyncio.sleep(delay)

        # Send a reminder message with the link to the original message
        await ctx.send(f"{ctx.author.mention}, it's time to check out your message! Here is the link: {message_link}")

    except ValueError:
        await ctx.send("Invalid time format! Use a number followed by s (seconds), m (minutes), or h (hours).")
```

Feel free to utilize this code and add it to your own Discord bot! As always, if this code is helpful please share it with your friends or on your own social media!
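If you want to test the duration parsing without spinning up a bot, it can be pulled out into a plain function. This is a small sketch of that idea rather than code from my bot; `parse_duration` is a name I picked for the example, and it adds a check for zero or negative values that the command above does not have.

```python
TIME_UNITS = {"s": 1, "m": 60, "h": 3600}


def parse_duration(duration):
    """Convert strings like '10s', '5m' or '1h' into a number of seconds."""
    if len(duration) < 2 or duration[-1] not in TIME_UNITS:
        raise ValueError("Use a number followed by s, m, or h")
    value = int(duration[:-1])  # raises ValueError for non-numeric input
    if value <= 0:
        raise ValueError("Duration must be greater than zero")
    return value * TIME_UNITS[duration[-1]]
```

Inside the command you would call `parse_duration(duration)` in the `try` block and keep the existing `except ValueError` branch to send the error message back to the channel.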

The Discord Bot Framework

I’m happy to announce the release of my Discord Bot Framework. A tool that I’ve spent a considerable amount of time working on to help people build and deploy Discord Bots quickly within AWS.

Let me first start off by saying I’ve never released a product. I’ve run a service business and I’m a consultant but I’ve never been a product developer. This release marks my first codebase that I’ve packaged and put together for developers and hobbyists to utilize.

So let’s talk about what this framework does. First and foremost, it is not a fully working bot. There are prerequisites that you must complete. The framework holds some example code for commands and message context responses which should be enough to get any Python developer started on building their bot. The framework also includes all of the required Terraform to deploy the bot within AWS.

When you launch the Terraform it will build a Docker image for you and deploy that image to ECR as well as launch the container within AWS Fargate. All of this lives behind a load balancer so that you can scale your bot’s resources as needed although I haven’t seen a Discord bot ever require that many resources!

I plan on supporting this project personally and providing support via email for the time being for anyone who purchases the framework.

Roadmap:

- GitHub Actions template for CI/CD

- More bot example code for commands

- Bolt-on packages for new functionality

I hope that this framework helps people get started on building bots for Discord. If you have any questions feel free to reach out to me at any time!

Product Name Detection with AWS Bedrock & Anthropic Claude

Well, my AWS bill may be a bit larger than normal this month due to testing this script. I thoroughly enjoy utilizing Generative AI to do work for me and I had some spare time to tackle this problem this week.

A client sent me a bunch of product images that were not named properly. All of the files were named something like “IMG_123.jpeg”. There were 63 files in total, so rather than going through them one by one I decided to see if I could get one of Anthropic’s models to handle it for me and, lo and behold, it was very successful!

I scripted out the workflow in Python and utilized AWS Bedrock’s platform to execute the interactions with the Claude 3 Haiku model. Take a look at the code below to see how this was executed.

```python
import os
import shutil
import sys

# bedrock_actions and modify_product_name are defined elsewhere in the project.

if __name__ == "__main__":
    print("Processing images")
    files = os.listdir("photos")
    print(len(files))
    for file in files:
        if file.endswith(".jpeg"):
            print(f"Sending {file} to Bedrock")
            with open(f"photos/{file}", "rb") as photo:
                prompt = """
                Looking at the image included, find and return the name of the product.
                Rules:
                1. Return only the product name that has been determined.
                2. Do not include any other text in your response like "the product determined..."
                """
                model_response = bedrock_actions.converse(
                    prompt,
                    image_format="jpeg",
                    encoded_image=photo.read(),
                    max_tokens="2000",
                    temperature=.01,
                    top_p=0.999
                )
            print(model_response['output'])
            product_name = modify_product_name(model_response['output']['message']['content'][0]['text'])
            # Copy under the new name first; only move the original once the copy succeeds.
            # shutil avoids shell quoting problems with unusual product names.
            try:
                shutil.copy(f"photos/{file}", f"renamed_photos/{product_name}.jpeg")
            except OSError:
                print("failed to copy file")
            else:
                shutil.move(f"photos/{file}", f"finished/{file}")
    sys.exit(0)
```

The code will loop through all the files in a folder called “photos”, passing each one to Bedrock and getting a response. A lot of the characters that were returned would either break the script or were just not needed, so I also wrote a function to handle those.

Ultimately, the script will copy the photo to a file named after the product and then move the original file into a folder called “finished”.
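The cleanup function is not shown above, so here is a hypothetical stand-in to illustrate the idea. It is a sketch under my own assumptions about which characters are safe in a filename, not the exact function from the repository, and `sanitize_product_name` is a made-up name.

```python
import re


def sanitize_product_name(raw):
    """Turn a model response into a string that is safe to use as a filename."""
    # Trim whitespace and any quotes the model wrapped around the name.
    name = raw.strip().strip("\"'")
    # Drop everything except letters, digits, spaces, dots, underscores and hyphens.
    name = re.sub(r"[^A-Za-z0-9 ._-]+", "", name)
    # Collapse runs of whitespace into single underscores.
    name = re.sub(r"\s+", "_", name.strip())
    # Fall back to a placeholder if nothing usable is left.
    return name or "unknown_product"


print(sanitize_product_name('  "Coca-Cola Classic 12oz"\n'))  # Coca-Cola_Classic_12oz
```

Stripping slashes and colons matters most here, since either one can change where the copied file ends up or make the copy fail outright.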

I’ve uploaded the code to GitHub and you can utilize it however you want!