Do you love or hate Jenkins? I feel like a lot of the DevOps world has issue with it but, this post and system could easily be modified to any CI/CD tool.

One thing I do not enjoy about Jenkins is reading through its logs and trying to find out why my pipelines have failed. Because of this I decided this is a perfect use case for an AI to come in and find the problem and present possible solutions for me. I schemed up this architecture:

┌─────────────────┐ ┌─────────────────┐ ┌─────────────────┐

│ Jenkins │ │ API Gateway │ │ Ingestion │

│ (Shared Lib) │────▶│ /webhook │────▶│ Lambda │

└─────────────────┘ └─────────────────┘ └────────┬────────┘

│

▼

┌─────────────────┐ ┌─────────────────┐ ┌─────────────────┐

│ SQS Queue │◀────│ Analyzer │◀────│ SQS Queue │

│ (Dispatcher) │ │ Lambda │ │ (Analyzer) │

│ + DLQ │ │ (Bedrock) │ │ + DLQ │

└────────┬────────┘ └─────────────────┘ └─────────────────┘

│

▼

┌─────────────────┐ ┌─────────────────┐

│ Dispatcher │────▶│ SNS Topic │────▶ Email/Slack/etc.

│ Lambda │ │ (Notifications) │

└─────────────────┘ └─────────────────┘A simple explanation is that when a pipeline fails we are going to send the logs to an AI and it will send us the reasoning as to why the failure occurred as well as possible troubleshooting steps.

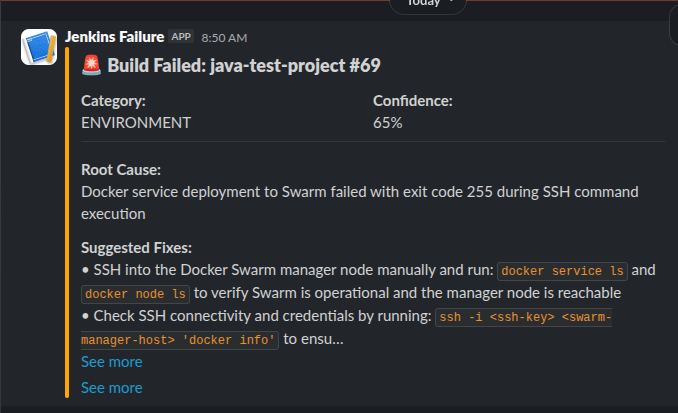

Fine. This isn’t that interesting. It saves time which is awesome. Here is a sample output into my Slack:

This failure is because I shutdown my Docker Swarm as I migrated to K3s.

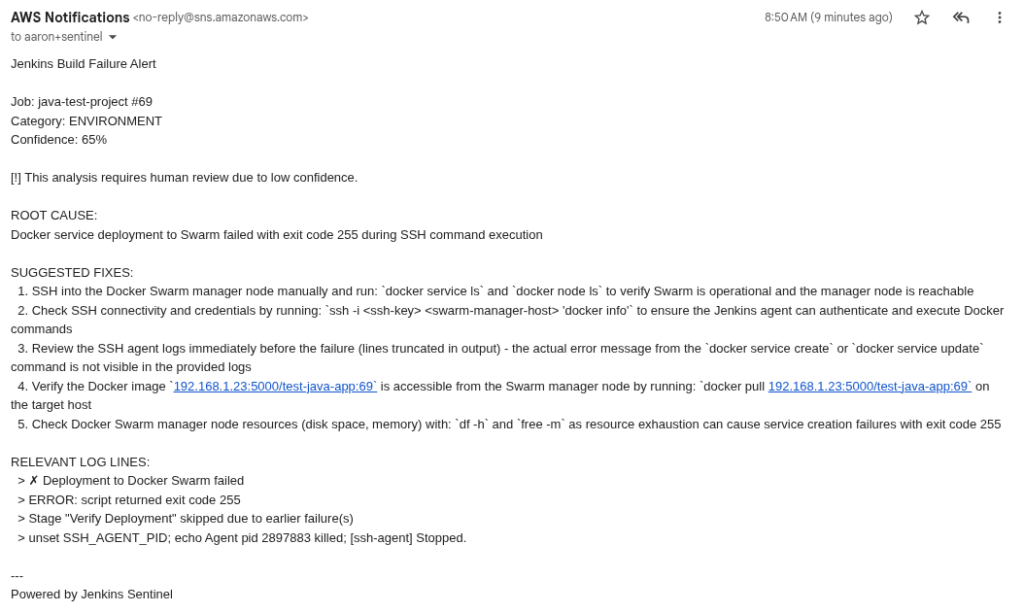

Here is the same alert via email from SNS:

So why build this? Well, this weekend I worked on adding “memory” to this whole process in preparation of two things:

- MCP Server

- Troubleshooting Runbook(s)

Jenkins already has an MCP server that works great in Claude Code. You can use it to query jobs, get logs, have Claude Code troubleshoot, resolve and redeploy.

Unless you provide Claude Code with ample context about your deployment, its architecture and the application it might not do a great job fixing the problem. Or, it might change some architecture or pattern that is not to your organization or personal standards. This is where my thoughts about adding memory to this process comes in.

If we add a data store to the overall process and log an incident, give it unique identifiers we can begin to have patterns and ultimately help the LLM make better decisions about solving problems within pipelines.

Example:

{

"PK": "FP#3315b888564167f2f72185c51b3c433b6bfa79e7b0e4f734e9fe46fe0df2d8c6",

"SK": "INC#66a6660f-6745-468f-b516-41c51b8d0ecf",

"build_number": 69,

"category": "environment",

"confidence_score": 0.65,

"created_at": "2026-02-09T14:50:26.322599+00:00",

"fingerprint": "3315b888564167f2f72185c51b3c433b6bfa79e7b0e4f734e9fe46fe0df2d8c6",

"incident_id": "66a6660f-6745-468f-b516-41c51b8d0ecf",

"job_name": "java-test-project",

"relevant_log_lines": [

"✗ Deployment to Docker Swarm failed",

"ERROR: script returned exit code 255",

"Stage \"Verify Deployment\" skipped due to earlier failure(s)",

"unset SSH_AGENT_PID; echo Agent pid 2897883 killed; [ssh-agent] Stopped."

],

"requires_human_review": true,

"reviewed_by": null,

"root_cause": "Docker service deployment to Swarm failed with exit code 255 during SSH command execution",

"status": "suspected",

"suggested_fixes": [

"SSH into the Docker Swarm manager node manually and run: `docker service ls` and `docker node ls` to verify Swarm is operational and the manager node is reachable",

"Check SSH connectivity and credentials by running: `ssh -i <ssh-key> <swarm-manager-host> 'docker info'` to ensure the Jenkins agent can authenticate and execute Docker commands",

"Review the SSH agent logs immediately before the failure (lines truncated in output) - the actual error message from the `docker service create` or `docker service update` command is not visible in the provided logs",

"Verify the Docker image `192.168.1.23:5000/test-java-app:69` is accessible from the Swarm manager node by running: `docker pull 192.168.1.23:5000/test-java-app:69` on the target host",

"Check Docker Swarm manager node resources (disk space, memory) with: `df -h` and `free -m` as resource exhaustion can cause service creation failures with exit code 255"

],

"updated_at": "2026-02-09T14:50:26.322599+00:00"

}This is the log from the same incident I showed before. The same information now stored. So, now, if we hook up an MCP to our AI and ask it to find patterns we get something like this:

Here's the summary of your 18 incidents across 7 jobs, all from today and yesterday:

---

Category Breakdown

┌───────────────┬───────┬─────┐

│ Category │ Count │ % │

├───────────────┼───────┼─────┤

│ environment │ 11 │ 61% │

├───────────────┼───────┼─────┤

│ configuration │ 6 │ 33% │

├───────────────┼───────┼─────┤

│ code │ 1 │ 6% │

└───────────────┴───────┴─────┘

Most Common: environment (11 incidents)

The dominant failure pattern is Kubernetes deployment rollout timeouts — pods failing to become ready after kubectl apply or

kubectl rollout status. This hits nearly every job:

- accounting-cloud (4 incidents) — rollout timeout + health check timeout

- oovoo-javer-bot (1) — rollout timeout, also noted k3ks-3 node is NotReady

- dividend-tracker (3) — rollout timeout + health check wait timeout

- aws-cost-optimizer (1) — rollout timeout

- Password Generator (1) — rollout timeout

- homelab-proxy (1) — deployment timeout

The root cause is consistently the same: pods don't start in time. The AI analysis flags likely causes as ImagePullBackOff,

CrashLoopBackOff, resource constraints, and the NotReady node (k3ks-3).

Second Most Common: configuration (6 incidents)

These are more varied:

- oovoo-javer-bot (3) — missing Jenkins credential oovoo-javer-bot-env, missing K8s secret oovoo-javer-bot-secrets, empty

withCredentials block

- homelab-proxy (2) — Traefik CRD API version mismatch (traefik.containo.us/v1alpha1 vs traefik.io/v1alpha1)

- dividend-tracker (1) — PVC resize forbidden (can't shrink PVC below current capacity)

- food-cost-app-pipeline (1) — Terraform state lock held by another process

Key Takeaway

Your k3s cluster is the bottleneck. 11 of 18 failures are pods not starting in time. The k3ks-3 NotReady node suggests a

cluster health issue that's likely causing scheduling failures or resource pressure across all your deployments. Fixing that

node (or removing it from the cluster) would likely resolve the majority of these incidents.

Ignore the fact that many of these are the same error. I did a lot of this on purpose.

What you SHOULD imagine is how does this run inside your environment(s) and what data would you collect. If you think about it, you should realize you would find the bottlenecks of your own deployments. You would find the spots where your developers are getting stuck. You can then create solutions to those issues and hopefully reduce that trend line.

Next steps.

We need a human in the loop element. I’m going to start crafting a web interface where these issues are presented to a human engineer. That engineer could add notes or better steps for resolution. With that data added into the memory the troubleshooting agent can follow best practices of your organization or home lab.

So, stay tuned for the web interface. If you’re interested in setting this up for yourself shoot me a message and I’ll give you access to the repository.