A client approached me about a set of files they had: certificates that support their products. They deliver these files to customers during the sales process.

The workflow currently involves manually packaging the files up into a deliverable format. The client asked me to automate this process across their thousands of documents.

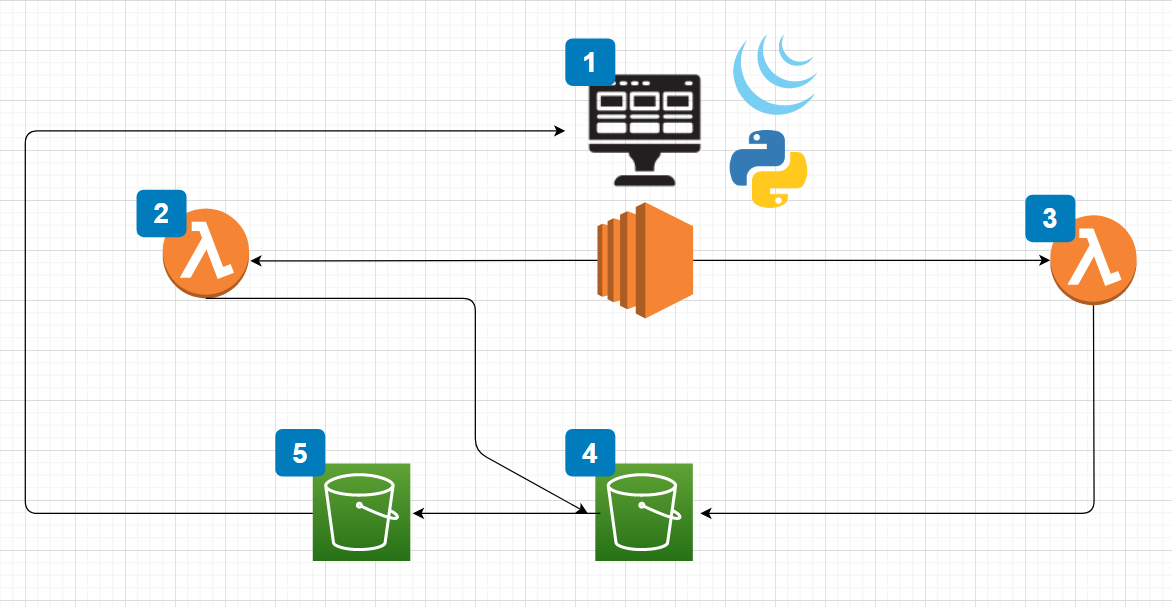

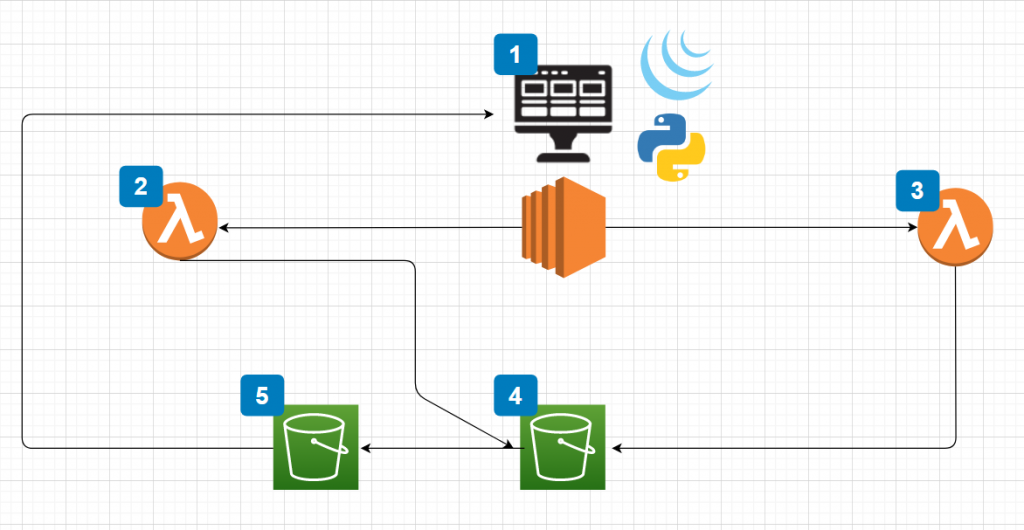

As I started thinking through how this would work, I decided on a serverless approach: Amazon S3 for document storage, AWS Lambda for the processing, and Amazon S3 with CloudFront to host the front end of the application.

My current architecture uses two S3 buckets: one stores the original PDF documents, and the other receives copies of the documents we are going to package up for the client before sending.

The idea is to tag each PDF file with the lot number supplied by the client. A simple web form then passes a lot number to the function, which collects the matching documents.
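For thousands of files, the tagging itself is easier to script than to do by hand in the console. A minimal sketch, assuming the lot number for each file is already known (passed in here as a dict) and using a hypothetical tag key `lot`; `tag_pdfs_with_lots` is an illustrative name, and the S3 client is passed in so the function can be tried without AWS.

```python
def tag_pdfs_with_lots(s3, bucket: str, lots_by_key: dict, tag_key: str = "lot") -> int:
    """Tag each object in `bucket` with its lot number.

    `s3` is a boto3 S3 client, e.g. boto3.client("s3").
    `lots_by_key` maps object keys (e.g. "widget-a.pdf") to lot numbers.
    `tag_key` is a hypothetical tag name; use whatever the client prefers.
    Returns the number of objects tagged.
    """
    count = 0
    for key, lot in lots_by_key.items():
        # put_object_tagging REPLACES the object's whole tag set, so the
        # lot number ends up as the only tag on the object.
        s3.put_object_tagging(
            Bucket=bucket,
            Key=key,
            Tagging={"TagSet": [{"Key": tag_key, "Value": lot}]},
        )
        count += 1
    return count
```

With boto3 installed, a call might look like `tag_pdfs_with_lots(boto3.client("s3"), "source-bucket", {"widget-a.pdf": "LOT-1001"})`, where the bucket name and mapping are placeholders.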

Here is the code for the web frontend:

```html
<!DOCTYPE html>
<html>
<head>
  <script src="https://ajax.googleapis.com/ajax/libs/jquery/2.2.4/jquery.min.js"></script>
  <script type="text/javascript">
    $(document).ready(function() {
      $("#submit").click(function(e) {
        // Stop the browser's default form submission; send the request via AJAX instead.
        e.preventDefault();
        var lot = $("#lot").val();
        $.ajax({
          type: "POST",
          // Replace API_URLHERE with the endpoint that invokes the Lambda function.
          url: 'API_URLHERE',
          contentType: 'application/json',
          // Sends {"body": "<lot>"}; the function reads the lot number back out of this JSON.
          data: JSON.stringify({
            'body': lot,
          }),
          success: function(res) {
            $('#form-response').text('Query was processed.');
          },
          error: function() {
            $('#form-response').text('Error.');
          }
        });
      });
    });
  </script>
</head>
<body>
  <form>
    <label for="lot">Lot</label>
    <input id="lot">
    <button id="submit">Submit</button>
  </form>
  <div id="form-response"></div>
</body>
</html>
```

This is a single-field form that sends the lot number to my Lambda function, wrapped in a small JSON payload. Once the request arrives, the function parses that JSON to get the lot number and uses it to find the matching objects in Amazon S3.
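The lot number passes through two layers named `body`, which is easy to trip over. A minimal sketch of just the parsing step, assuming an API Gateway Lambda proxy integration (where the raw request body arrives as a string in `event["body"]`); `extract_lot` is a hypothetical helper name, and trimming whitespace is an added assumption about user input.

```python
import base64
import json


def extract_lot(event: dict) -> str:
    """Pull the lot number out of an API Gateway Lambda proxy event.

    The form posts JSON.stringify({'body': lot}), and a proxy integration
    delivers that JSON as a string in event["body"], so the lot number sits
    under a second "body" key once the string is parsed.
    """
    raw = event["body"]
    # Proxy integrations can base64-encode the body (e.g. for binary media types).
    if event.get("isBase64Encoded"):
        raw = base64.b64decode(raw).decode("utf-8")
    payload = json.loads(raw)  # '{"body": "LOT-1001"}' -> {'body': 'LOT-1001'}
    # Assumption: stray spaces typed into the form should not break the match.
    return str(payload["body"]).strip()
```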

Here is the function:

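```python
import json

import boto3

# Create the client once per container so warm invocations reuse it.
s3 = boto3.client("s3")

# Placeholder names; real S3 bucket names cannot contain underscores.
SOURCE_BUCKET = "source-bucket"
DEST_BUCKET = "destination-bucket"


def lambda_handler(event, context):
    # With a Lambda proxy integration, the POSTed JSON arrives as a string,
    # e.g. '{"body": "LOT-1001"}', so parse it and read the lot number.
    tag_list = json.loads(event["body"])
    print(tag_list)
    tag_we_want = tag_list["body"]

    download_list = []

    # list_objects returns at most 1,000 keys per call, so page through
    # the bucket instead of reading only the first response.
    paginator = s3.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=SOURCE_BUCKET):
        # "Contents" is missing when a page (or the bucket) is empty.
        for obj in page.get("Contents", []):
            key = obj["Key"]
            tag_set = s3.get_object_tagging(Bucket=SOURCE_BUCKET, Key=key)["TagSet"]

            # Check every tag value; indexing TagSet[0] raises IndexError
            # on untagged objects.
            if any(tag["Value"] == tag_we_want for tag in tag_set):
                s3.copy_object(
                    Bucket=DEST_BUCKET,
                    CopySource={"Bucket": SOURCE_BUCKET, "Key": key},
                    Key=key,
                )
                download_list.append(key)

    # A REST API proxy integration expects statusCode/body and answers with a
    # 502 for a bare tuple. The CORS header (assumed: allow any origin) is
    # needed if the page is served from a different origin than the API.
    return {
        "statusCode": 200,
        "headers": {"Access-Control-Allow-Origin": "*"},
        "body": json.dumps({"files": download_list, "lot": tag_we_want}),
    }
```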

In this code, we define our source and destination buckets first. Then, using the lot number from the form submission, we list all the objects in the source bucket and check each object's tags for a match.

Once we have the files we want for the customer, we copy them to the destination bucket. The function returns the list of copied files along with the tag value.
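With thousands of documents, the function makes one `get_object_tagging` request per object, one after another, which can easily exceed Lambda's default 3-second timeout (configurable up to 15 minutes). A hedged sketch of the same list-then-check approach with the tag lookups spread across a thread pool; `keys_matching_lot` and the worker count are hypothetical choices, and the client is passed in so it can be tried against a stub.

```python
from concurrent.futures import ThreadPoolExecutor


def keys_matching_lot(s3, bucket: str, lot: str, max_workers: int = 16) -> list:
    """Return keys in `bucket` that carry a tag whose value equals `lot`.

    boto3 low-level clients are thread-safe, so one client can be shared
    across worker threads. max_workers=16 is an arbitrary starting point,
    not a tuned value.
    """
    keys = []
    # Page through the bucket; a single list call returns at most 1,000 keys.
    paginator = s3.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket):
        keys.extend(obj["Key"] for obj in page.get("Contents", []))

    def has_lot(key: str) -> bool:
        tag_set = s3.get_object_tagging(Bucket=bucket, Key=key)["TagSet"]
        return any(tag["Value"] == lot for tag in tag_set)

    # pool.map preserves input order, so results line up with `keys`.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        matches = list(pool.map(has_lot, keys))
    return [key for key, matched in zip(keys, matches) if matched]
```

The matching keys can then be fed to the same `copy_object` loop as before.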

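My next step is to package all the required files into a ZIP file for download. I first attempted this in Lambda and ran into the read-only file system: everything outside `/tmp` is read-only, and `/tmp` provides 512 MB of ephemeral storage by default (configurable up to 10,240 MB), so the archive has to be written there or built in memory.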
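One way to sidestep the file system entirely is to build the archive in memory with Python's standard `zipfile` module. A minimal sketch, assuming the combined PDFs for a lot fit within the function's configured memory; `build_zip` and `zip_s3_objects` are hypothetical helper names, and the S3 client is passed in.

```python
import io
import zipfile


def build_zip(files: dict) -> bytes:
    """Pack {archive_name: file_bytes} into an in-memory ZIP and return its bytes."""
    buffer = io.BytesIO()
    # ZIP_DEFLATED compresses; PDFs are often already compressed, so gains may be small.
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for name, data in files.items():
            archive.writestr(name, data)
    return buffer.getvalue()


def zip_s3_objects(s3, bucket: str, keys: list) -> bytes:
    """Download each key from `bucket` and pack them into one ZIP.

    The full S3 key is used as the archive name so files with the same
    name under different prefixes do not overwrite each other.
    """
    files = {}
    for key in keys:
        # get_object returns a streaming body; read() loads the whole object.
        files[key] = s3.get_object(Bucket=bucket, Key=key)["Body"].read()
    return build_zip(files)
```

If a bundle is too large for memory, `zipfile.ZipFile` also accepts a path such as `/tmp/bundle.zip`, subject to the ephemeral storage limit.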

Right now, I am thinking of using Docker to run a worker that will generate the ZIP file, place it back into the bucket, and provide a time-limited download link to the client.
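The time-limited link can be an S3 presigned URL, which grants temporary access to a private object. A minimal sketch of the final upload-and-link step, assuming the ZIP bytes are already built; `publish_zip`, the bucket and key, and the one-hour expiry are hypothetical, and the worker would pass in `boto3.client("s3")`.

```python
def publish_zip(s3, zip_bytes: bytes, bucket: str, key: str, expires_in: int = 3600) -> str:
    """Upload a ZIP to S3 and return a time-limited download link.

    `expires_in` is in seconds; 3600 (one hour) is an example value.
    The URL is signed with the caller's credentials, so if those are
    temporary (e.g. a role), the link stops working when they expire.
    """
    s3.put_object(Bucket=bucket, Key=key, Body=zip_bytes, ContentType="application/zip")
    return s3.generate_presigned_url(
        "get_object",
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=expires_in,
    )
```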

Stay tuned for more updates on this project.