So I was wrong in last week’s post! Our playoffs started this week. In my league, all the teams go to the playoffs, and if you lose, you drop into a loser’s bracket.

Our AI-run team was seeded at number 3. We were in a three-way tie for first place, and we ended the “regular season” with 2054.76 points. The leader had 2114.12. So, we weren’t far off the front!

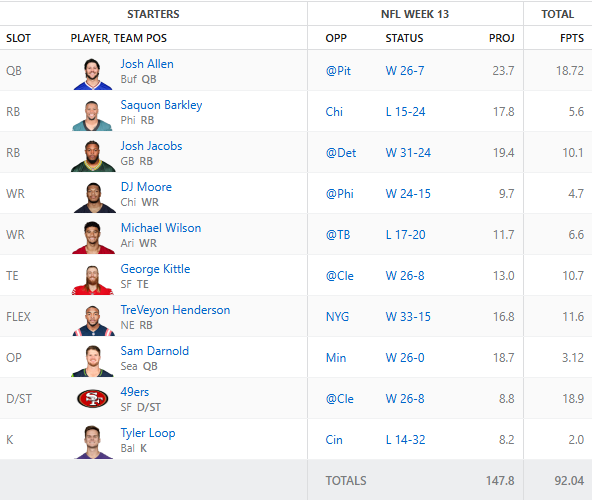

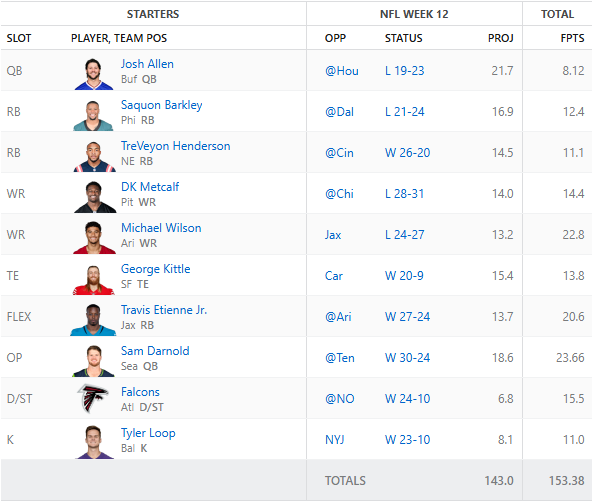

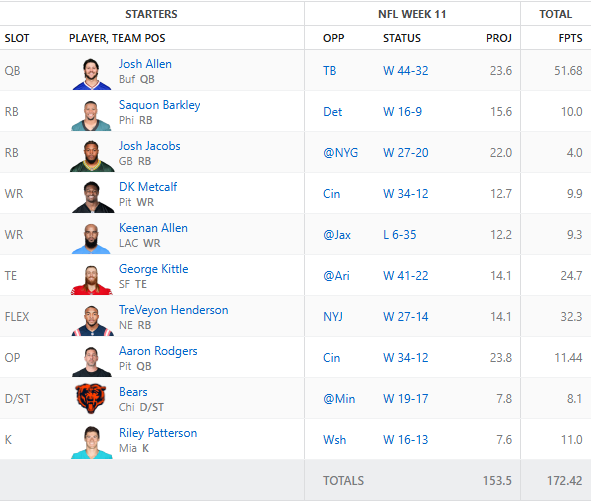

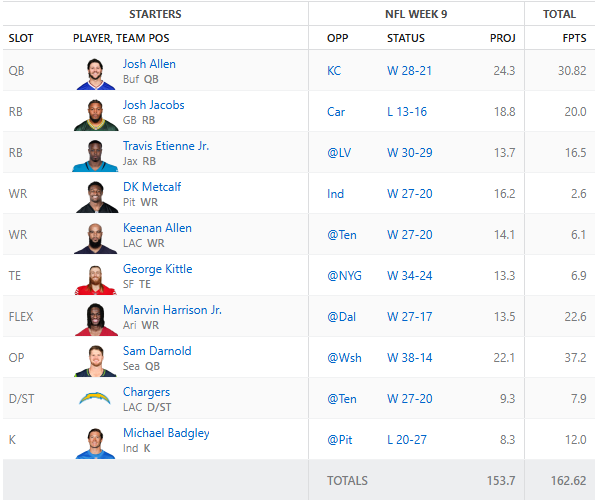

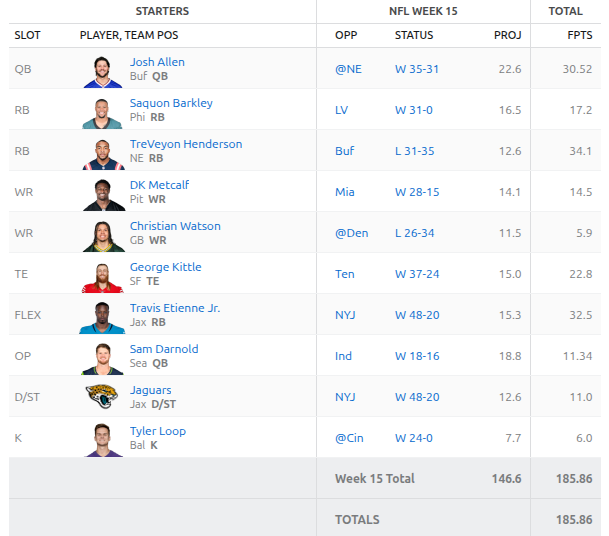

Anyway, enough of that. You all just want to know the outcome. Here are our point totals from our first round in the playoffs:

I ran the AI on Saturday and it suggested pulling out Josh Jacobs in favor of TreVeyon Henderson. This ended up getting us an extra 10 points, even though Josh Jacobs still put up 24.2 points this week. Everyone played really well this week except Sam Darnold. I’m not sure if his hot streak is over or what is going on with him, but it’s been rough. Christian Watson took a nasty hit in his game and left early, but he is expected to be just fine.

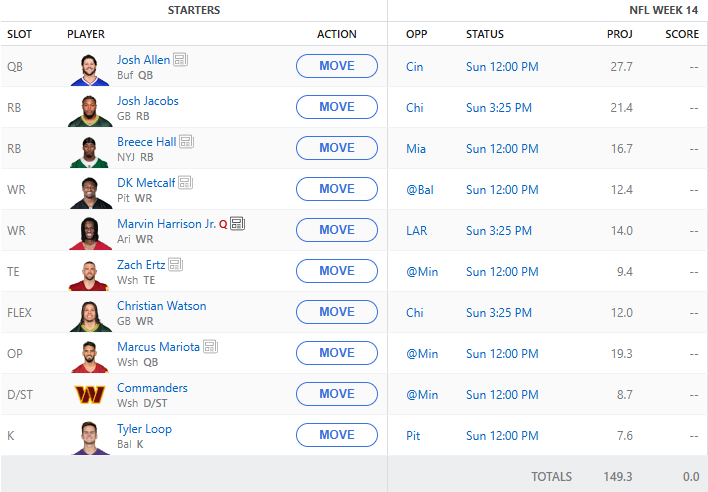

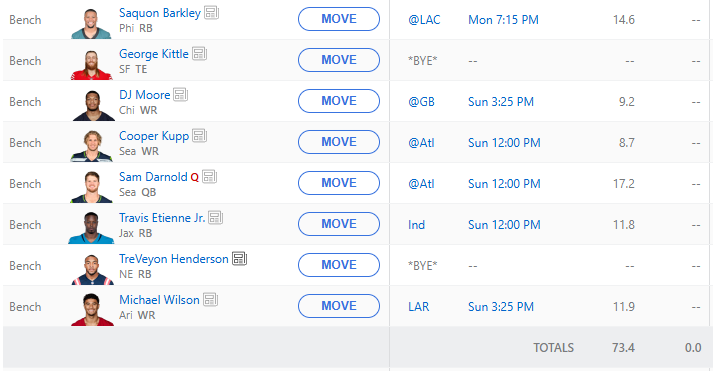

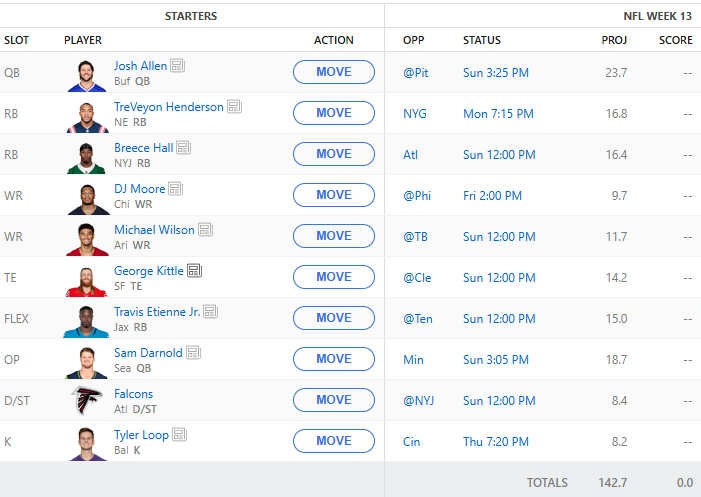

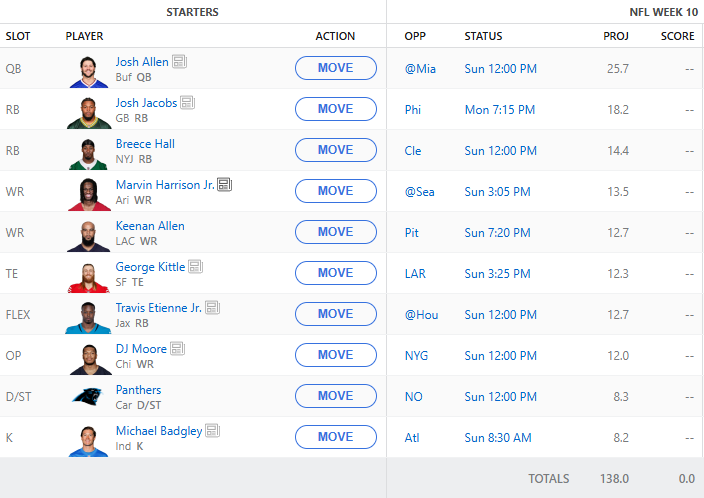

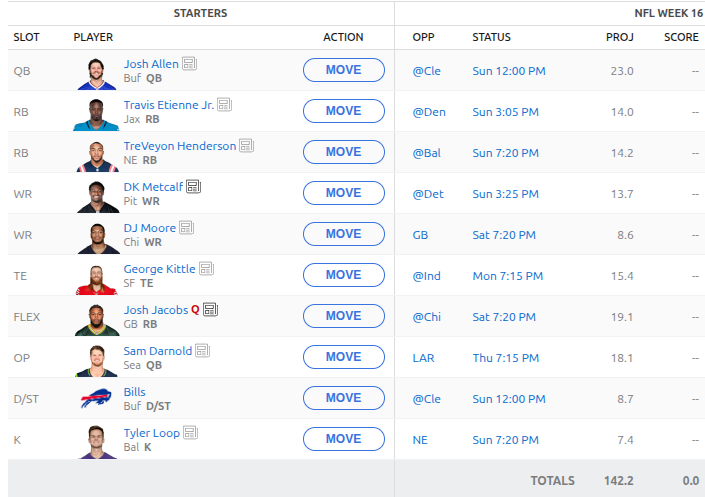

So, did we win? We sure did! We’re on to the next round of the playoffs, and we’re going to be up against Jahmyr Gibbs, so we have to hope for our best performance of the season next week. Here is the currently proposed roster:

We have a lot of injuries and questionable players, so I expect this to change. We picked up the Bills defense because they play Cleveland, and they should have a good time against that struggling offense.

As we look to the offseason, I hope to build up my API website https://gridirondata.com and start training the model that we will use for next year. I have been working on the overall workflow and looking into how I can have the scrapers running both in the cloud and in my homelab, so that I can easily work with the data locally and not incur a lot of cloud costs.

Stay tuned for more Fantasy Football news next week!