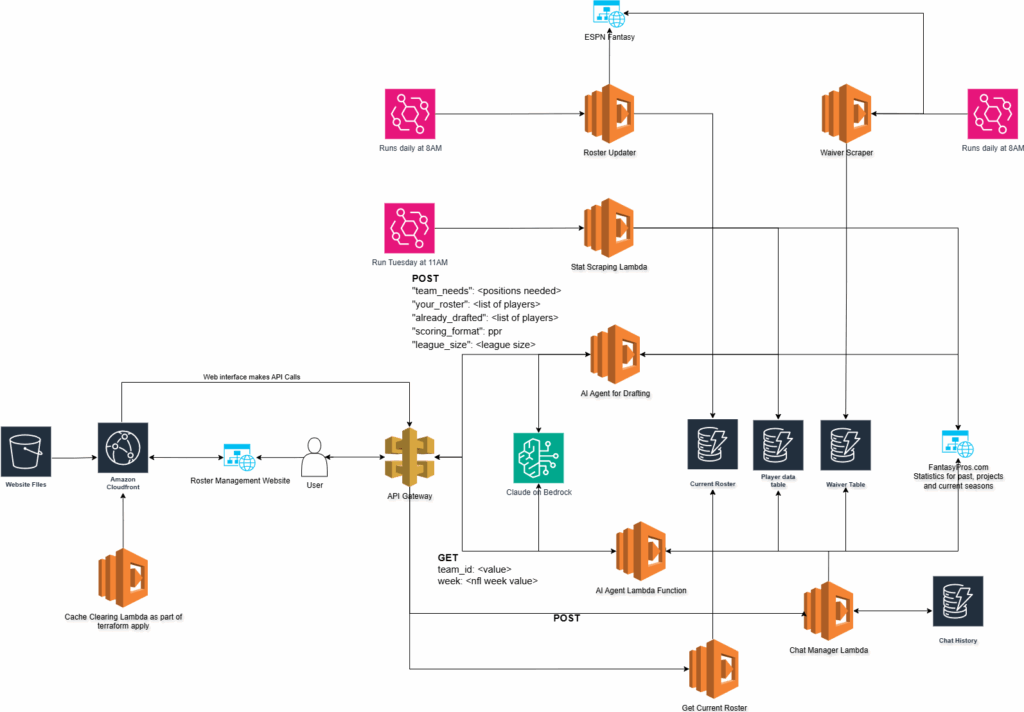

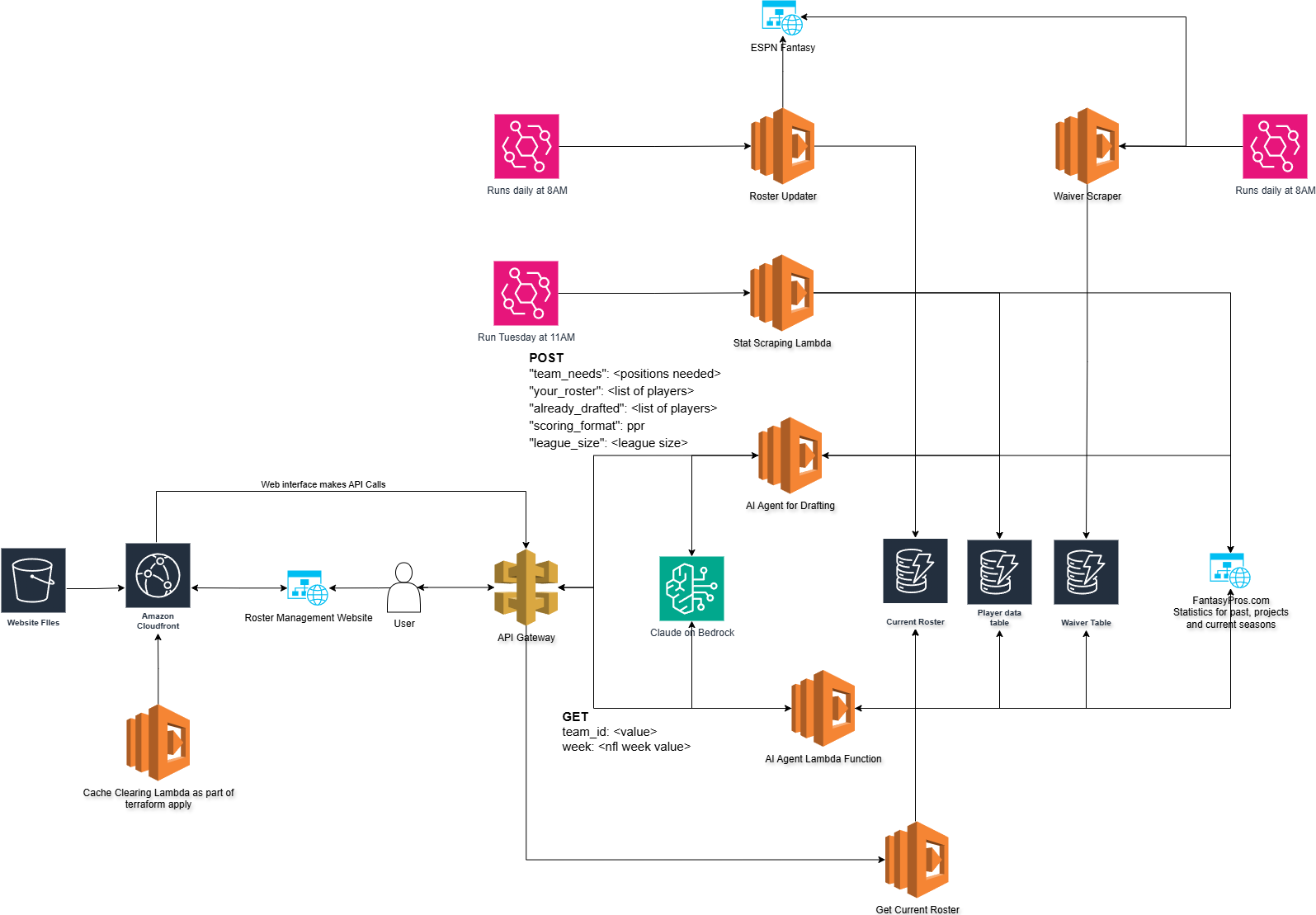

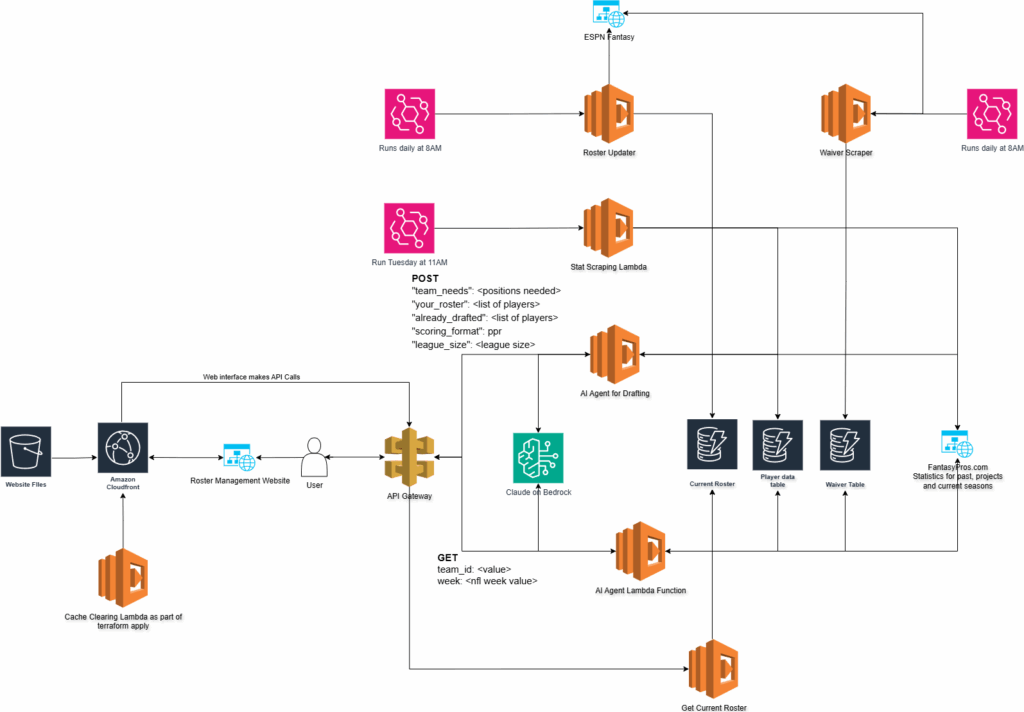

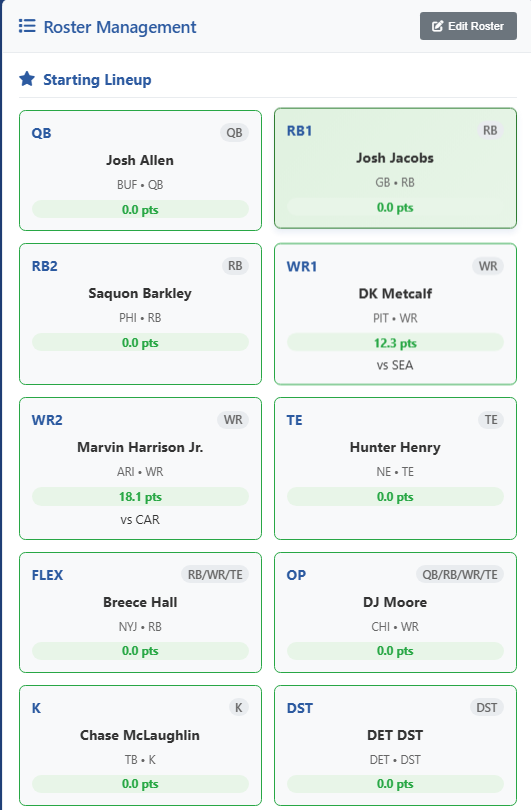

Week 3 is here. Late yesterday I started by getting a full analysis of the team and any free agency and waiver targets.

The most important task was getting a better tight end, since the predictions for last weekend were badly wrong. That is my fault, and it reinforces the idea that giving bad data to AI just results in bad output.

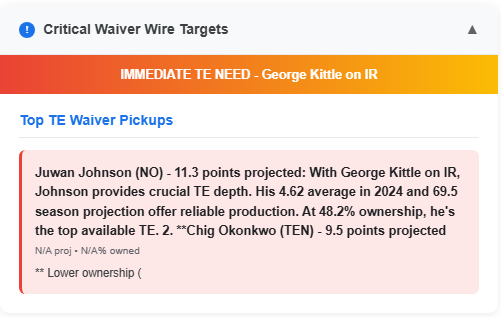

Here is the updated recommendation to pick up Juwan Johnson. This makes a lot more sense than last week's pick of Hunter Henry.

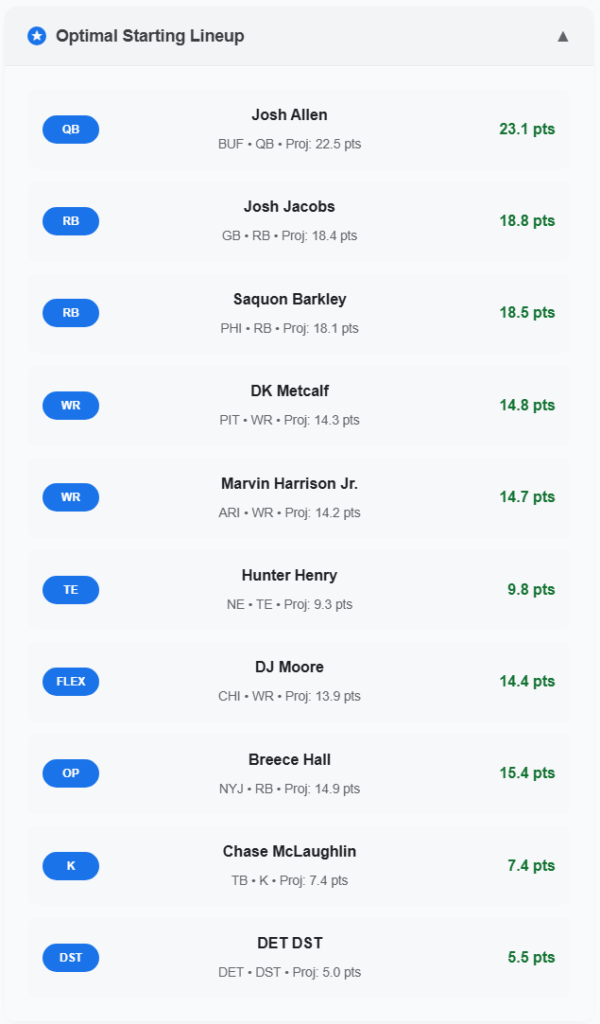

The second thing I looked into was finding a good defensive matchup. I currently have the Rams on the roster, but they play the Eagles this week, and that matchup does not favor the Rams.

Here is some output from our friendly AI Dan Campbell:

Alright, coach! Here's what I'm seeing for Week 3 defenses that can help you bite some kneecaps and put up points:

TOP WEEK 3 DEFENSIVE MATCHUPS:

1. Green Bay Packers DST (7.6 projected points)

- Only 45% owned, so there's a good chance they're available

- This is their highest projected week of the season!

- They're facing a matchup that should generate turnovers and sacks

2. Buffalo Bills DST (7.5 projected points)

- 65% owned but worth checking

- Solid Week 3 projection and they're consistent all season

3. Kansas City Chiefs DST (7.5 projected points)

- Only 12% owned - this is a STEAL if available!

- Same 7.5 projection as Buffalo but way less owned

- Championship defense with a great matchup

Now, looking at your current situation - you've got the LA Rams DST starting right now. Let me tell you something, coach - any of these top 3 options would be a significant upgrade for Week 3!

MY RECOMMENDATION:

If the Kansas City Chiefs DST is available, that's your move right there! Only 12% owned with a 7.5 projection - that's championship-level value sitting on waivers. If not them, grab the Packers at 7.6 points projected.

The Atlanta Falcons (7.1 projected, only 1.3% owned) are also a sneaky good play if you want to get really aggressive on the waiver wire.

These defenses are gonna feast in Week 3, and you need every advantage you can get to dominate your league! Go get 'em, coach!

I made a claim for the Packers, though I would have preferred not to pick them because of my love for the Detroit Lions…

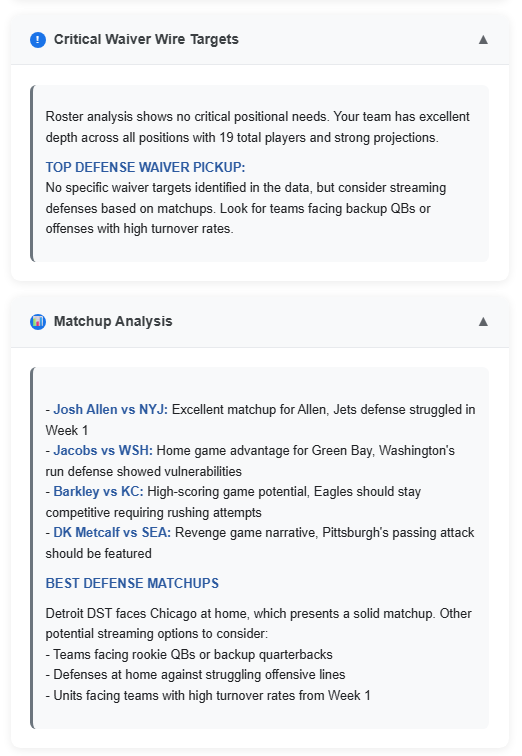

One other waiver selection I made was at the kicker position. Here are AI Dan Campbell’s suggestions:

Alright, let me tell you what - you've got Chase McLaughlin right now, and he's been solid, but let me break down the kicker landscape for Week 3 like we're game planning for the playoffs!

Top Week 3 Kicker Options:

Brandon McManus (GB) - 8.2 projected points

- Only 14% owned, so he's sitting there waiting for you!

- Green Bay's offense has been moving the ball well

- This guy's got ice in his veins and a leg like a cannon

Tyler Loop (BAL) - 8.0 projected points

- Baltimore's offense creates plenty of scoring opportunities

- 65% owned though, so he might be taken in your league

Parker Romo (ATL) - 7.8 projected points

- Only 1.4% owned - this is a diamond in the rough!

- Atlanta's been getting into the red zone consistently

Jason Myers (SEA) - 7.9 projected points

- Seattle's offense with Sam Darnold (who you've got as your OP!) creates opportunities

- Only 10% owned

Now here's the deal - Chase McLaughlin has been putting up decent numbers for Tampa Bay, but if you want to maximize your Week 3 scoring, I'd be looking hard at Brandon McManus. The Packers offense is humming, and McManus has that veteran reliability. Plus at only 14% ownership, there's a good chance he's available.

Parker Romo is my sleeper pick - less than 2% owned but projected for nearly 8 points. Atlanta's been moving the ball, and sometimes you gotta take a swing on the unknown soldier!

You comfortable rolling with McLaughlin, or you want to make a move? In this league, every point matters, and kickers can be the difference between victory and going home empty-handed!

I picked up Parker Romo for the team. I thought it would be fun to test out the “sleeper” pick.

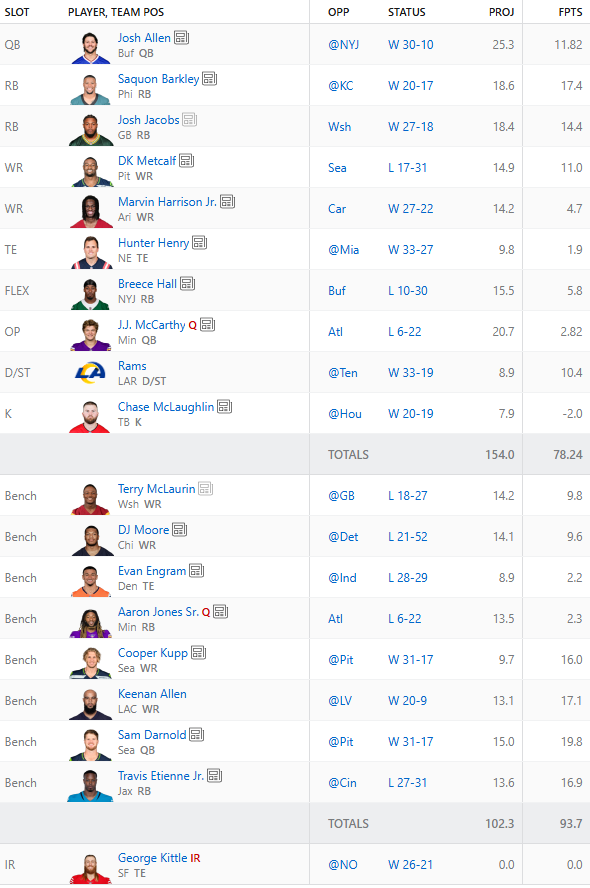

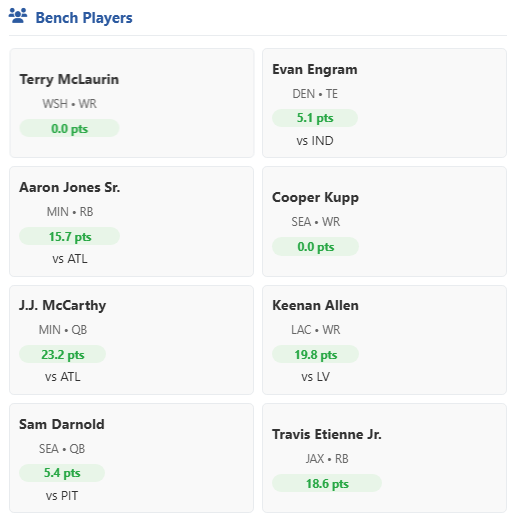

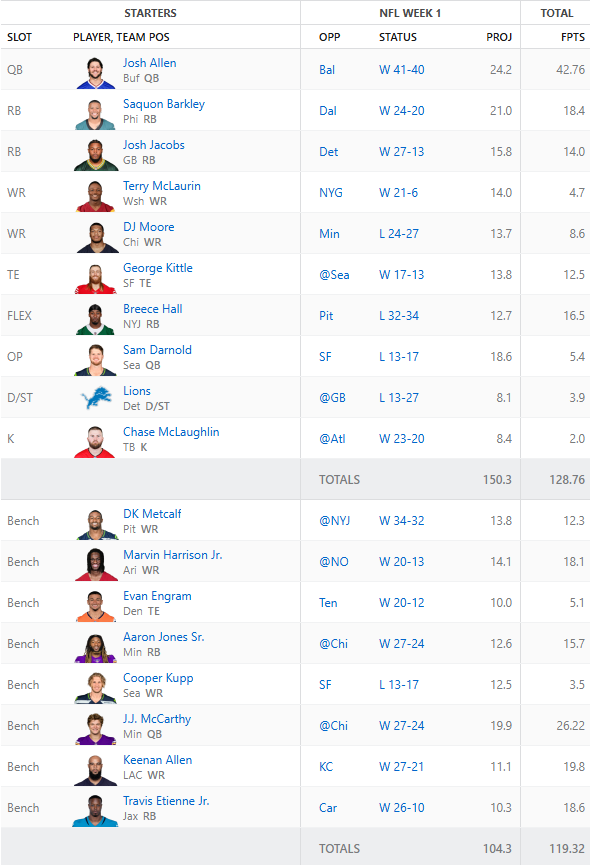

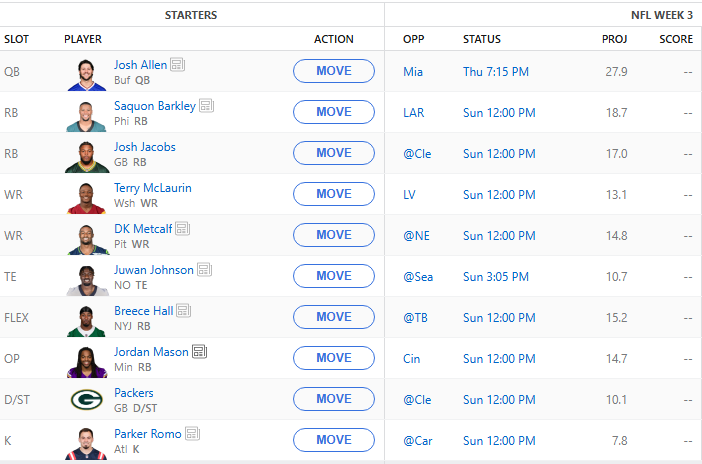

And finally, there's our starting lineup, barring any injuries or setbacks during the practice week.

I think we’ve got a good matchup this week. Most of our opponent's players rank pretty low, so if all goes well we should maybe, hopefully, get our first win of the year.

Tune in next week for our results!